Meeting Notes (2020 06 23)

Alex McLain edited this page Jun 25, 2020 · 3 revisions

- Spike at: https://github.com/doughsay/rgb_matrix_simulator

- Screenshot

- It's diverging off the path from Xebow and needs to be brought back in.

- Effects can now have their own properties, and the LiveView app can help configure those properties.

- Specified with the `field` macro in an `Effect` module.

- The simulator could be served from the Xebow and used to configure it.

- Daniel's original idea was an education platform and a framework for learning to build hardware projects.

- Lately we've been focusing a lot on turning it into a mechanical keyboard (Chris).

- We need a unified way of describing keyboard layouts for all of these use cases.

- Xebow becomes the packaging for running a mechanical keyboard on this specific platform (as well as other projects). The mechanical keyboard work in general could be abstracted into other libraries so it works across other hardware (e.g. a full-size keyboard).

- Xebow needs more libraries to be solidified, then it becomes a playground.

- We need a way to have a faster development feedback cycle when working on a LiveView app that runs on the hardware.

- Do we need a full simulator for this? And how difficult would it be?

- Currently made up of `KeyWithLED` structs.

- The order of this list is {y, x} (not {x, y}) due to the way the LEDs are arranged on the Keybow's SPI bus. We should probably use {x, y} and push the translation closer to the SPI GenServer.

- Should we have a layout library?

- When keys and LEDs are coupled together, we may be limited when it comes to rendering to LEDs that are not associated with keys, like LEDs along the perimeter of a keyboard (Chris has a keyboard that's an example of this).

- And some keys like spacebar may contain more than one LED.

- What if we had two separate layouts that stack on top of each other:

- A keys layout

- An LEDs layout

- Key presses (or anything else) could send {x, y} coordinates in an event to the LEDs layer of where an event happened. This decouples the concept of keys from LEDs.

- This could also include events from proximity sensors or an event generated by the application.

- Would we have to reverse how rendering works if we did this? Rather than rendering on to a pixel, does a pixel need to inquire what its color should be?

- Otherwise would we have to interpolate pixels from [inaccurate?] positions based on a frame buffer? Interpolation doesn't sound ideal.

- How did QMK do this?

- If we do this, how difficult would it be to map the physical LED matrix to the virtual layout? Especially for a normal keyboard where the LEDs will probably zig-zag due to the key pattern.

- Keyboard layout editor

- This editor also has its own format for describing layouts.

- QMK has "frame buffer effects"

- They don't seem to work right on some keyboards

- Used to generate the heatmap effect: The more you type on a spot, the "hotter" it gets and it bleeds outward.

- Do we have a better way to do this with opaque state in `Animation` and receiving & tracking event coordinates?
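To make the "separate LEDs layout" and "does a pixel need to inquire what its color should be?" ideas above concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the `HeatmapSketch` module, the 2x2 LED positions, the radius, and the linear falloff are hypothetical, and it does not reflect how QMK or the current `Animation` code works. Events are plain {x, y} coordinates, and each LED asks how much "heat" it should show based on its own position.

```elixir
defmodule HeatmapSketch do
  @moduledoc """
  Hypothetical sketch: LEDs live in their own layout and ask for their
  value, instead of being owned by keys.
  """

  # Assumed LED layout: physical LED index => {x, y} position.
  # The index order may zig-zag; only this map needs to know about it.
  @leds %{0 => {0, 0}, 1 => {1, 0}, 2 => {1, 1}, 3 => {0, 1}}

  # Assumed distance (in layout units) at which an event stops contributing.
  @radius 1.5

  @doc "Heat (0.0..1.0) that one event at `{ex, ey}` adds at LED position `{lx, ly}`."
  def heat_from({ex, ey}, {lx, ly}) do
    distance = :math.sqrt(:math.pow(lx - ex, 2) + :math.pow(ly - ey, 2))
    max(0.0, 1.0 - distance / @radius)
  end

  @doc "Total heat at an LED position from all tracked events, capped at 1.0."
  def heat_at(led_pos, events) do
    events
    |> Enum.reduce(0.0, fn event, acc -> acc + heat_from(event, led_pos) end)
    |> min(1.0)
  end

  @doc "Builds a frame: one heat value per LED, ordered by physical LED index."
  def render(events) do
    @leds
    |> Enum.sort_by(fn {index, _pos} -> index end)
    |> Enum.map(fn {_index, pos} -> heat_at(pos, events) end)
  end
end
```

Calling `HeatmapSketch.render([{0, 0}])` yields the hottest value at the LED under the event and lower values at its neighbours. Because the event source only supplies coordinates, the same function would work for key presses, proximity sensors, or application-generated events, and no frame-buffer interpolation is involved.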

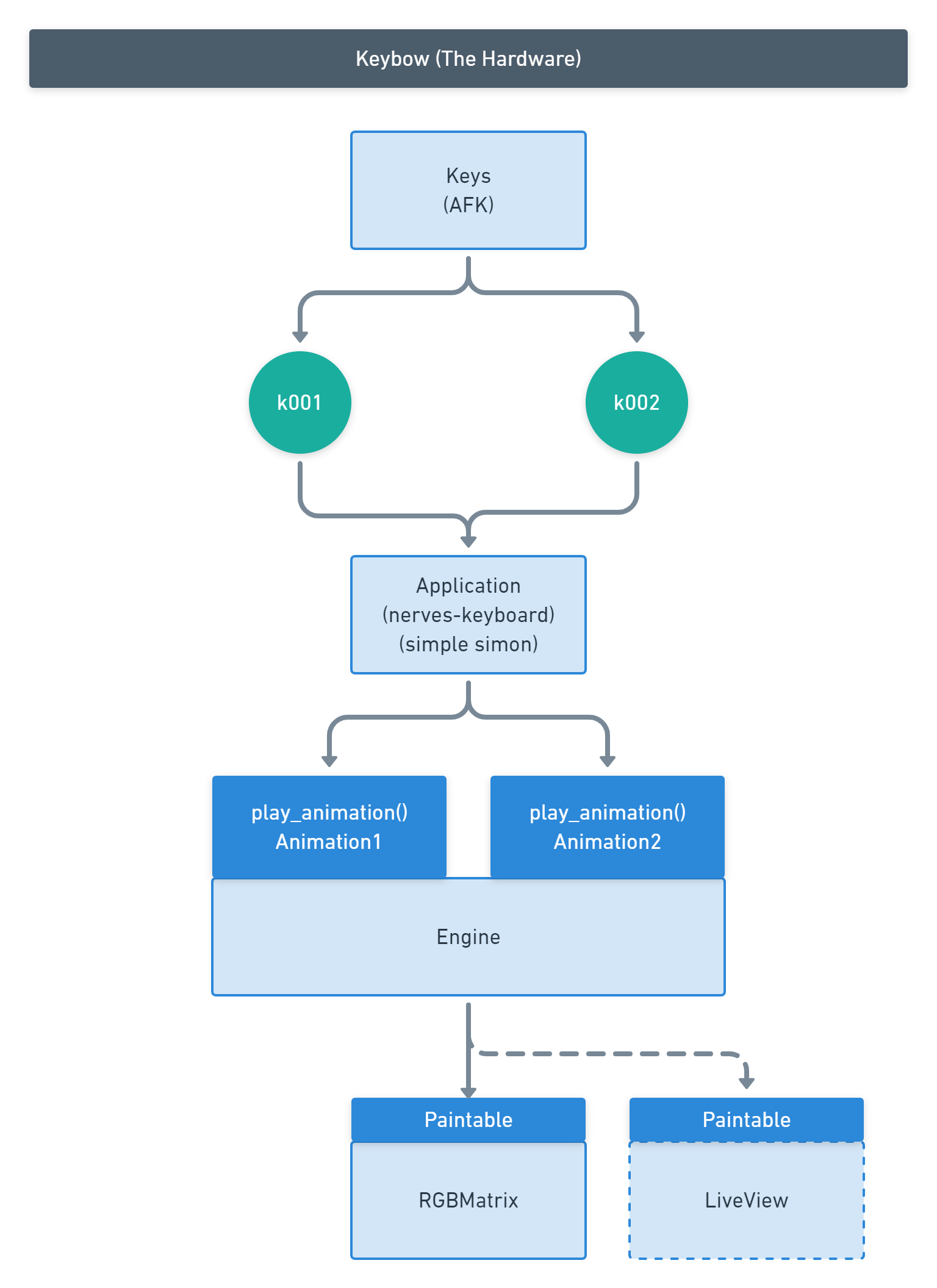

PR #33 - Refactor: Separate the render engine from the hardware

- Out of scope, but important stuff:

- The engine still needs to be able to play multiple animations, and suspend/stop animations.

- Cycling to previous & next animations should probably be the application's responsibility rather than the engine's.

- `Engine` has been introduced, which is responsible for rendering an animation. This includes drawing each frame of an animation and outputting it at the right time interval.

- The engine can send to multiple outputs now.

- Outputs implement the `Paintable` behaviour, which means the module has a function the engine can call to push a frame of pixels to it (i.e. LiveView doesn't have to worry about animation timing for each socket).

- A `Paintable` module is responsible for translating the frame of pixels to the physical hardware.
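The engine/output split described above could look roughly like the sketch below. It is not the code from PR #33: the module names (`PaintableSketch`, `EngineSketch`, `ConsoleOutput`, `MailboxOutput`), the `paint/1` callback, and the frame shape are all assumptions, and frame timing is left out entirely. It only shows one engine pushing the same frame to several outputs, with outputs added and removed at runtime.

```elixir
defmodule PaintableSketch do
  @moduledoc "Hypothetical stand-in for the Paintable behaviour."

  # Assumption: a frame is a list of {r, g, b} pixels.
  @type frame :: [{0..255, 0..255, 0..255}]

  # The engine calls this to push one frame to an output.
  @callback paint(frame()) :: :ok
end

defmodule ConsoleOutput do
  @moduledoc "Example output that only reports the frame size."
  @behaviour PaintableSketch

  @impl true
  def paint(frame) do
    IO.puts("console: #{length(frame)} pixels")
    :ok
  end
end

defmodule MailboxOutput do
  @moduledoc "Example output that forwards the frame to the calling process."
  @behaviour PaintableSketch

  @impl true
  def paint(frame) do
    send(self(), {:painted, frame})
    :ok
  end
end

defmodule EngineSketch do
  @moduledoc "Holds the set of registered outputs and pushes frames to them."

  def new, do: MapSet.new()

  def register(outputs, module), do: MapSet.put(outputs, module)

  def unregister(outputs, module), do: MapSet.delete(outputs, module)

  # Pushes one frame to every registered output.
  def push_frame(outputs, frame) do
    Enum.each(outputs, fn module -> module.paint(frame) end)
  end
end
```

For example, `EngineSketch.new() |> EngineSketch.register(ConsoleOutput) |> EngineSketch.register(MailboxOutput)` followed by `EngineSketch.push_frame(outputs, [{255, 0, 0}])` delivers the frame to both outputs. In a real engine the set of outputs would more likely live in a GenServer's state next to the animation timer.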

- Xebow could run multiple applications (i.e. you could shut down nerves-keyboard and run Simple Simon on the same hardware).

- Maybe Xebow could utilize `Application` to start/stop these apps on the Keybow hardware.

- Can they run as "extra_applications"?

- What if Xebow exposed a pub/sub mechanism [for key presses] for the apps to use?

- `keys.ex` - Serves as an interface that maps physical pins to a key map and state changes.

- AFK stores keyboard state.
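One possible shape for the pub/sub idea raised above is Elixir's built-in `Registry` with duplicate keys. This is a sketch under assumptions, not something Xebow provides: the `KeyEvents` module, the `:key_events` topic, and the `{:key_event, key, state}` message format are all hypothetical.

```elixir
defmodule KeyEvents do
  @moduledoc "Hypothetical pub/sub for key presses, built on Registry."

  @registry __MODULE__.Registry
  @topic :key_events

  # Starts the registry; duplicate keys let many processes share one topic.
  def start_link do
    Registry.start_link(keys: :duplicate, name: @registry)
  end

  # Subscribes the calling process to key events.
  def subscribe do
    {:ok, _owner} = Registry.register(@registry, @topic, [])
    :ok
  end

  # Sends {:key_event, key, state} to every subscribed process.
  def publish(key, state) when state in [:pressed, :released] do
    Registry.dispatch(@registry, @topic, fn subscribers ->
      for {pid, _value} <- subscribers, do: send(pid, {:key_event, key, state})
    end)
  end
end
```

An app such as a game would call `KeyEvents.subscribe()` once and then handle `{:key_event, key, state}` messages, while the code that reads the physical pins calls `KeyEvents.publish(key, :pressed)`. Subscriptions are removed automatically when a subscriber process exits, which fits apps that are started and stopped on the same hardware.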

- Architecture diagram

- We've discussed this before (2020-04-07) and decided to defer it so far due to the complexity.

- Wacom tablets do this, for example.

- Would need to write an application that runs on the host that can send data back to the Keybow.

- Xebow's USB is configured in `hid_gadget.ex`.

- USB HID Descriptor Tool

- pid.codes - Registry of USB PID codes for open source hardware.

- Every USB device has to have a vendor ID and product ID.

- A USB device can expose multiple endpoints (network, serial port, keyboard, etc.)

- USB HID devices - Standardized devices that you don't need a unique vendor ID for.

- Chris: Work on merging the simulator spike back into the Xebow repo.

- Alex: Update PR #33 to allow for registering and unregistering `Paintable`s at runtime.

- Alex + Nick: Pair up to fix the double key press issue (#32).

- LiveView simulator spike

- QMK LED layout

- Keyboard layout editor

- Plate & case builder

- Massdrop configurator

- AFK

- USB HID Descriptor Tool

- pid.codes - Registry of USB PID codes for open source hardware.

- USB developer info