Using LlamaChat Interface

LlamaChat: https://github.com/alexrozanski/LlamaChat

LlamaChat provides an interactive graphical interface for LLaMA-like models on macOS systems. The following instructions demonstrate the setup process using the Chinese Alpaca 7B model as an example.

Download the latest .dmg file from the LlamaChat releases page.

Link: https://github.com/alexrozanski/LlamaChat/releases

Open the .dmg file and drag LlamaChat into the Applications folder (or any other folder).

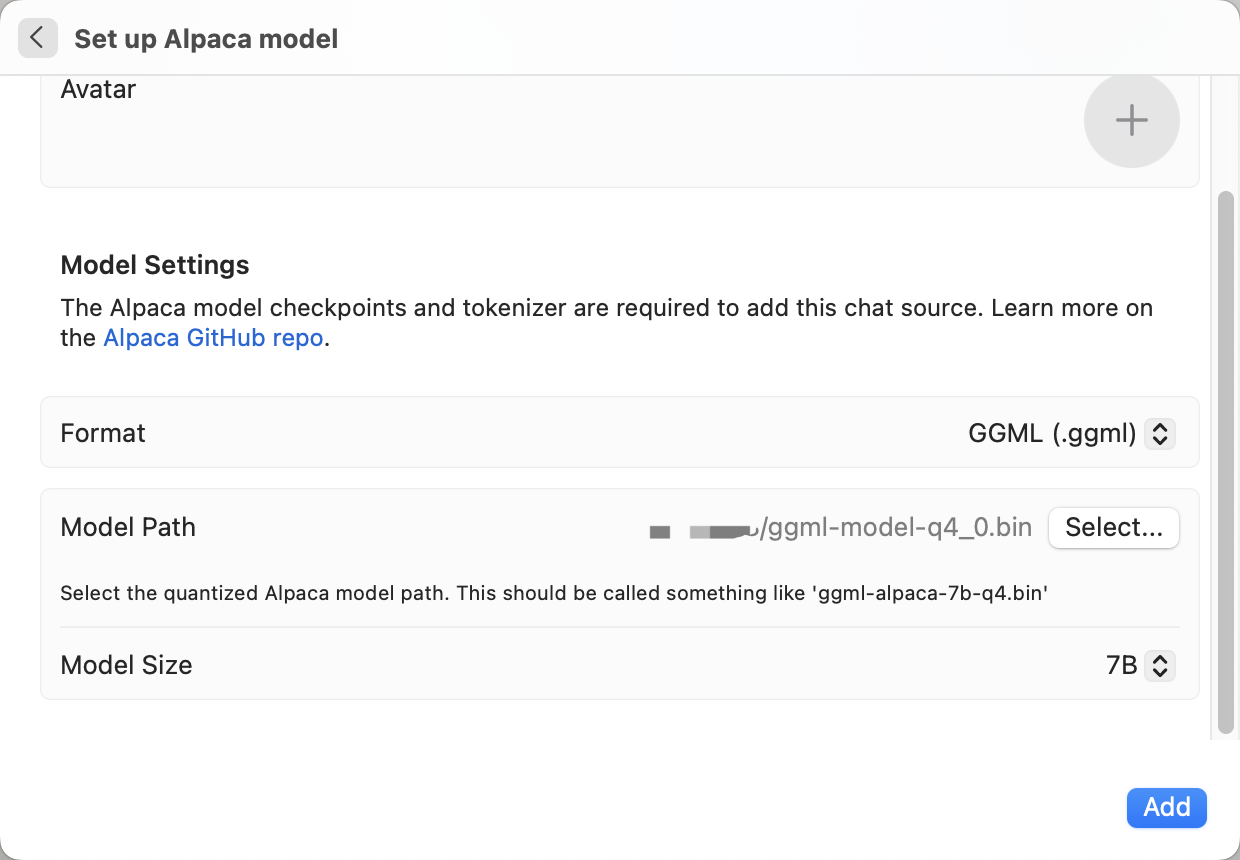

Follow the wizard to configure the model. In this example, choose the Alpaca model.

Name the model, choose an avatar, and select the matching format from the Format dropdown list: PyTorch (.pth extension) or GGML (.bin extension). Then specify the model path and model size. GGML is the format produced by converting a model with llama.cpp; for details, refer to llama.cpp conversion.

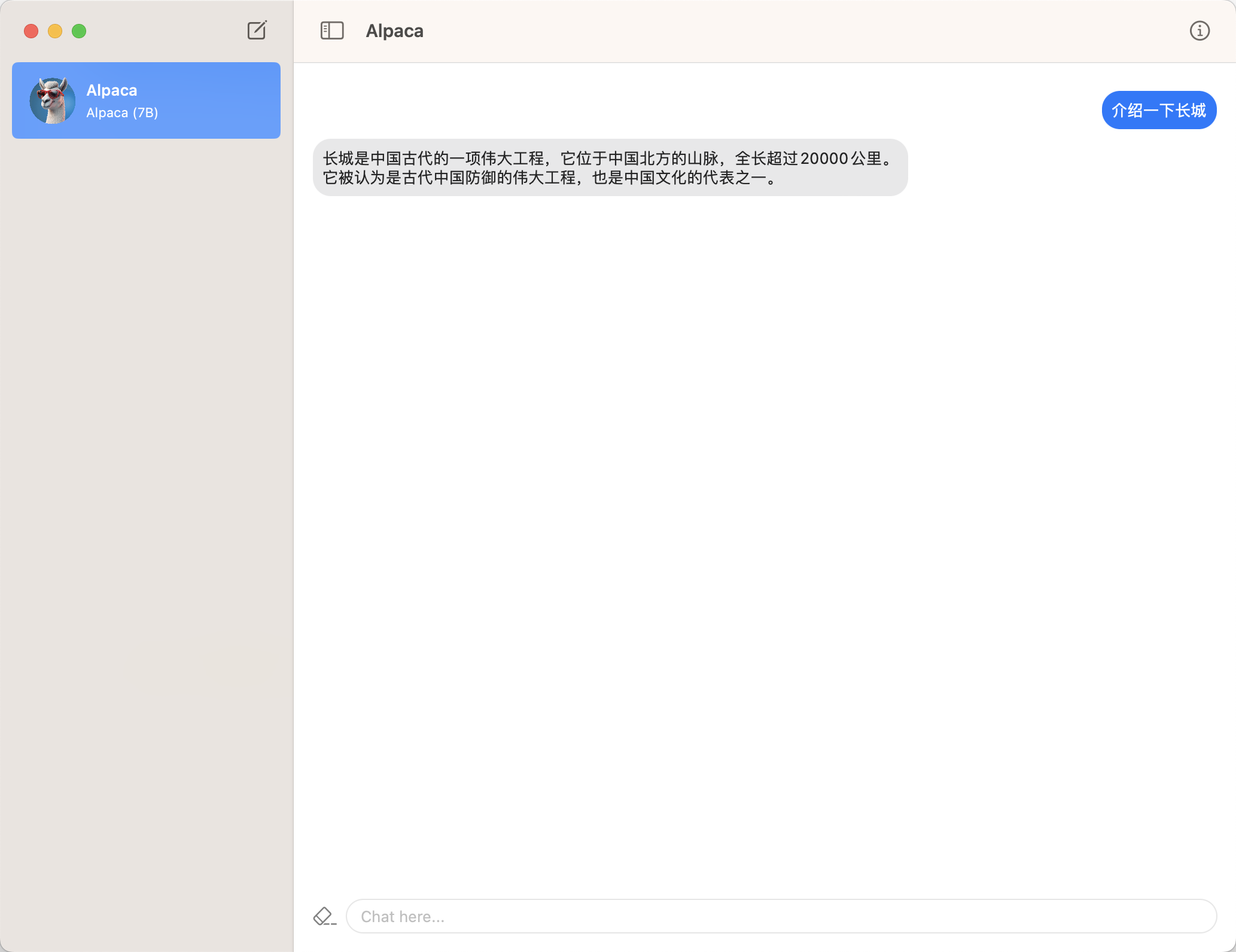
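If you are unsure which entry to pick in the Format dropdown, the extension rule described above can be sketched as a small helper. This is only an illustrative script and not part of LlamaChat; the function name `guess_llamachat_format` and the example file names are hypothetical, and the mapping covers only the two extensions mentioned in this guide.

```python
from pathlib import Path

# Mapping taken from this guide: .pth files are PyTorch checkpoints,
# .bin files are GGML models produced by llama.cpp conversion.
FORMAT_BY_EXTENSION = {
    ".pth": "PyTorch",
    ".bin": "GGML",
}


def guess_llamachat_format(model_path: str) -> str:
    """Return the Format dropdown entry that matches the file extension.

    Hypothetical helper: it only inspects the file name and does not
    open or validate the model file itself.
    """
    suffix = Path(model_path).suffix.lower()
    try:
        return FORMAT_BY_EXTENSION[suffix]
    except KeyError:
        raise ValueError(f"Unrecognized model file extension: {suffix!r}") from None
```

For example, calling `guess_llamachat_format("ggml-model-q4_0.bin")` returns `"GGML"`, while a path ending in `.pth` returns `"PyTorch"`. Any other extension raises a `ValueError`, which is a hint that the file may still need to be converted before LlamaChat can load it.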

After successfully adding the model, you can interact with it. Click on the icon to the left of the chat box to end the current round of conversation (this clears the context cache but does not delete the corresponding chat history).

(Note: The first response is slower because the model has to be loaded before it can generate anything; subsequent responses run at normal speed.)

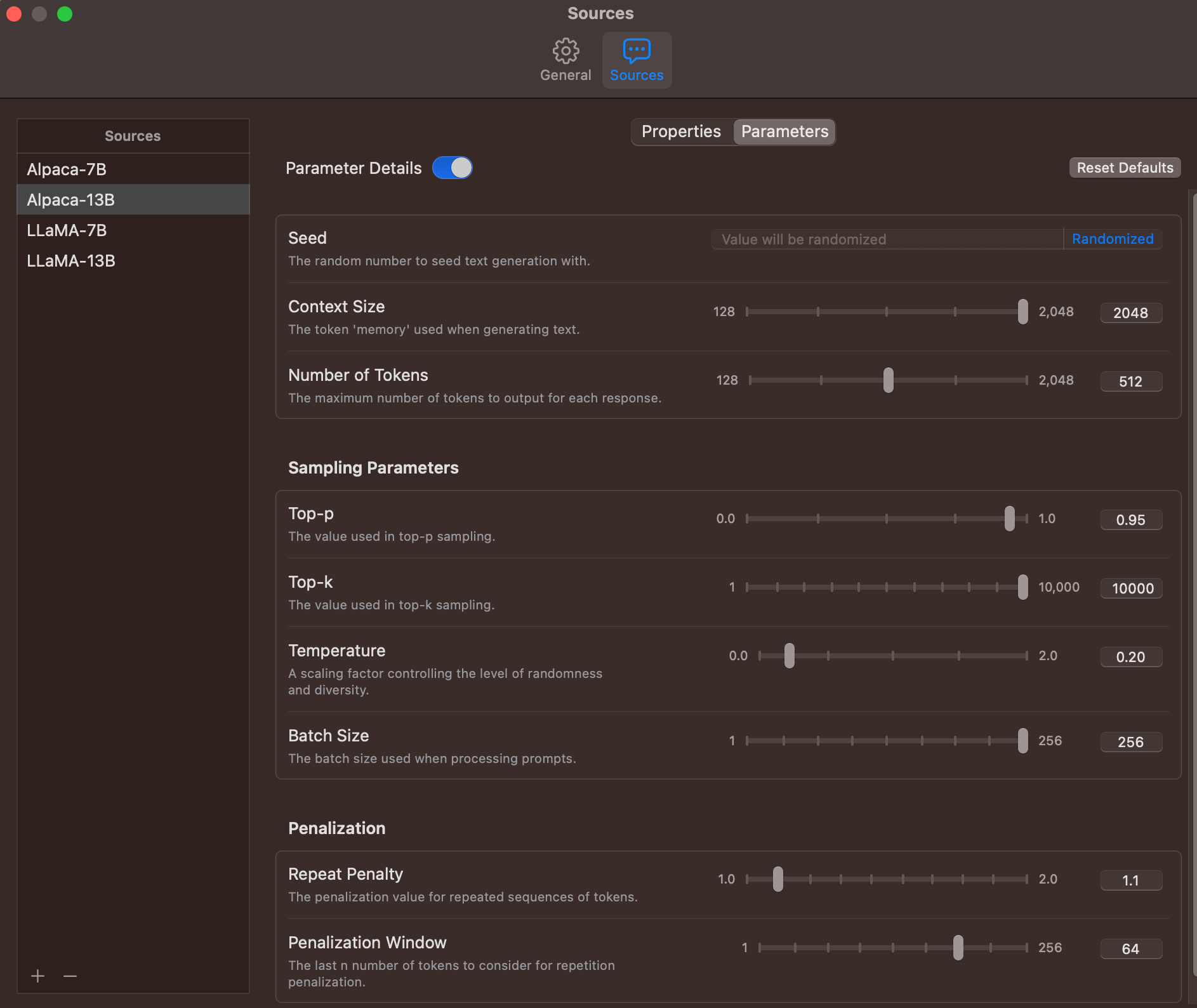

LlamaChat v1.2 introduces customizable inference settings. Try adjusting them to see how they affect the model's output.
