This repository has been archived by the owner on Nov 3, 2022. It is now read-only.

- What I did

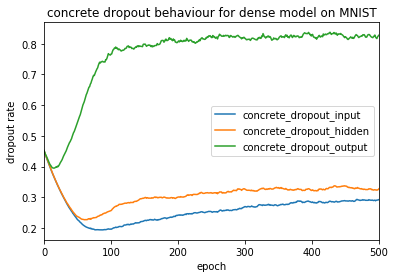

Implemented Concrete Dropout, following the paper and yaringal's implementation. The layer determines the optimal dropout rate on its own.

- How I did it

Used a Keras wrapper. Modifying the Keras Dense/Conv2D layers themselves would result in more elegant code, but this version should work as well.
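For reviewers unfamiliar with the technique, here is a rough, framework-free sketch of the relaxed ("concrete") dropout mask that such a wrapper applies to the wrapped layer's input. It is not the code in this PR: the function names are hypothetical, the temperature of 0.1 and the epsilon are assumed values, and in the real layer `p` is a trainable variable rather than a plain float.

```python
import math
import random


def _sigmoid(z):
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def concrete_dropout_mask(p, n, temperature=0.1, eps=1e-7, rng=None):
    """Return n relaxed keep-mask values in [0, 1] for dropout probability p.

    Hypothetical helper: temperature and eps are assumed defaults.
    """
    rng = rng or random.Random()
    logit_p = math.log(p + eps) - math.log(1.0 - p + eps)
    mask = []
    for _ in range(n):
        u = rng.random()  # uniform noise in [0, 1)
        logit_u = math.log(u + eps) - math.log(1.0 - u + eps)
        # Soft "drop" decision; it approaches a hard Bernoulli(p) draw
        # as the temperature goes to zero, while staying differentiable in p.
        drop = _sigmoid((logit_p + logit_u) / temperature)
        mask.append(1.0 - drop)
    return mask


def apply_concrete_dropout(x, p, **kwargs):
    """Scale inputs by the relaxed mask and by 1 / (1 - p), as in standard dropout."""
    mask = concrete_dropout_mask(p, len(x), **kwargs)
    return [xi * mi / (1.0 - p) for xi, mi in zip(x, mask)]
```

Because the mask is a smooth function of `p`, gradients can flow into the dropout rate, which is what lets the rate be learned together with the weights.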

- How you can verify it

With the attached test file and with the following example call:

The dropout rates should converge to 0.29, 0.33, and 0.82, respectively, for the layers in the above example, as visualized in the following plot: