Detailed information about this framework can be found in [1, 2]. In addition, the source code of each module is well documented.

| Package | Version (>=) |
|---|---|
| Python | 3.8 |
| NumPy | 1.22.0 |
| SciPy | 1.5.0 |
| matplotlib | 3.2.2 |
| tqdm | 4.47.0 |
| pandas | 1.5.3 |
| scikit-learn | 1.2.2 |
| TensorFlow* | 2.8.0 |

*For Mac M1/M2, one may need to install TensorFlow via conda, for example:

`conda install -c apple tensorflow-deps`

Further information can be found in "Install TensorFlow on Mac M1/M2 with GPU support" by D. Ganzaroli.
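As a convenience, the minimum versions listed in the table above can be checked from Python before running the framework. The following is only a sketch that uses the standard library; the helper names `parse_version` and `check_requirements` are made up for this example and are not part of the framework. It also assumes that each package is registered under the distribution name shown in the dictionary, which may not hold for every installation (for instance, some Mac setups install TensorFlow under a different distribution name).

```python
import sys
from importlib import metadata

# Minimum versions copied from the requirements table above.
MINIMUM_VERSIONS = {
    "numpy": "1.22.0",
    "scipy": "1.5.0",
    "matplotlib": "3.2.2",
    "tqdm": "4.47.0",
    "pandas": "1.5.3",
    "scikit-learn": "1.2.2",
    "tensorflow": "2.8.0",  # assumed distribution name; may differ on Mac
}


def parse_version(text):
    """Turn '1.22.0' into (1, 22, 0), keeping only the leading digits of each part."""
    parts = []
    for chunk in text.split(".")[:3]:
        digits = ""
        for char in chunk:
            if char not in "0123456789":
                break
            digits += char
        if not digits:
            break
        parts.append(int(digits))
    while len(parts) < 3:  # pad '1.22' to (1, 22, 0)
        parts.append(0)
    return tuple(parts)


def check_requirements(minimums=MINIMUM_VERSIONS):
    """Return {package: (installed_version_or_None, required_version, ok)}."""
    report = {}
    for name, required in minimums.items():
        try:
            installed = metadata.version(name)
        except metadata.PackageNotFoundError:
            installed = None
        ok = installed is not None and parse_version(installed) >= parse_version(required)
        report[name] = (installed, required, ok)
    return report


if __name__ == "__main__":
    print("Python >= 3.8:", sys.version_info >= (3, 8))
    for name, (installed, required, ok) in check_requirements().items():
        status = "OK" if ok else "MISSING/OLD"
        print(f"{name:<14} installed={installed} required>={required} {status}")
```

The comparison is deliberately simple: pre-release suffixes such as `rc1` are ignored, so a release candidate is treated like the final release.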

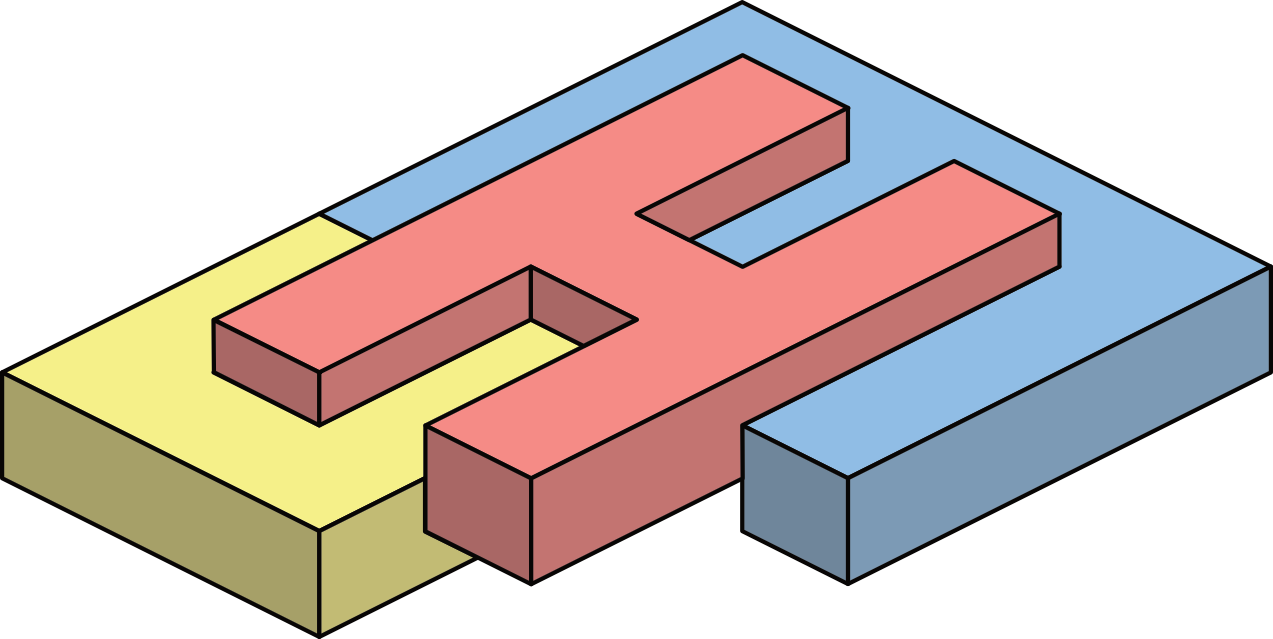

The modules that comprise this framework depend on some basic Python packages, as well as they liaise each other. The module dependency diagram is presented as follows:

NOTE: Each module is briefly described below. If you require further information, please check the corresponding source code.

This module includes several benchmark functions as classes to be solved by using optimisation techniques. The class structure is based on Keita Tomochika's repository optimization-evaluation.

Source: benchmark_func.py
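To illustrate what "benchmark functions as classes" can look like, here is a minimal, self-contained sketch. The class and method names (`BenchmarkFunction`, `Sphere`, `evaluate`, `is_feasible`) and the search bounds are assumptions made for this example; they are not taken from benchmark_func.py, so check the source for the actual interface.

```python
class BenchmarkFunction:
    """Hypothetical base class: a problem with box bounds and a fitness function."""

    def __init__(self, num_dimensions, lower, upper):
        self.num_dimensions = num_dimensions
        self.lower = [lower] * num_dimensions
        self.upper = [upper] * num_dimensions

    def evaluate(self, position):
        """Return the function value at `position` (to be minimised)."""
        raise NotImplementedError

    def is_feasible(self, position):
        """Check that `position` has the right size and lies inside the bounds."""
        return len(position) == self.num_dimensions and all(
            low <= value <= high
            for low, value, high in zip(self.lower, position, self.upper)
        )


class Sphere(BenchmarkFunction):
    """f(x) = sum(x_i ** 2), with its global minimum f(0) = 0 at the origin."""

    def __init__(self, num_dimensions):
        # The bounds [-100, 100] are an arbitrary choice for this sketch.
        super().__init__(num_dimensions, -100.0, 100.0)

    def evaluate(self, position):
        return sum(value * value for value in position)


problem = Sphere(3)
print(problem.evaluate([1.0, 2.0, 3.0]))  # 1 + 4 + 9 = 14.0
```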

This module contains the class Population. A Population object corresponds to a set of agents or individuals within a problem domain. These agents themselves do not explore the function landscape, but they know when to update the position according to a selection procedure.

Source: population.py
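The idea that agents do not move by themselves but decide whether to accept a new position can be sketched as follows. This is not the `Population` class from population.py: the class `TinyPopulation`, its `update` method, and the two selector names used here are hypothetical and only illustrate the concept of a selection procedure, assuming a minimisation problem.

```python
class TinyPopulation:
    """Hypothetical container of agent positions and their fitness values."""

    def __init__(self, positions, fitness):
        self.positions = [list(position) for position in positions]
        self.fitness = list(fitness)

    def update(self, candidates, candidate_fitness, selector="greedy"):
        """Accept or reject candidate positions proposed by a search operator.

        selector="greedy": keep a candidate only if it is not worse (minimisation).
        selector="all":    always accept the candidate.
        """
        if selector not in ("greedy", "all"):
            raise ValueError(f"unknown selector: {selector!r}")
        for index, (candidate, new_fitness) in enumerate(
            zip(candidates, candidate_fitness)
        ):
            if selector == "all" or new_fitness <= self.fitness[index]:
                self.positions[index] = list(candidate)
                self.fitness[index] = new_fitness


population = TinyPopulation([[1.0], [2.0]], [1.0, 4.0])
# The first candidate improves its agent; the second one is worse and is rejected.
population.update([[0.5], [3.0]], [0.25, 9.0], selector="greedy")
print(population.positions, population.fitness)
```

Keeping the acceptance rule inside the population, separate from the operator that proposes candidates, is what allows the same operator to be combined with different selectors.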

This module has a collection of search operators (simple heuristics) extracted from several well-known metaheuristics in the literature. Such operators work over a population, i.e., they modify the individuals' positions.

Source: operators.py

This module contains the Metaheuristic class. A metaheuristic object implements a set of search operators to guide a population in a search procedure within an optimisation problem.

Source: metaheuristic.py

This module contains the Hyperheuristic class. It is similar to the Metaheuristic class, but it requires a collection of search operators. A hyper-heuristic object searches within the heuristic space to find the sequence that builds the best metaheuristic for a specific problem.

Source: hyperheuristic.py
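The relationship between search operators, a metaheuristic, and a hyper-heuristic can be sketched with a toy example. Everything below is an assumption made for illustration: the two operators, the function names, and the use of a plain hill climber over operator sequences. It does not reproduce the search strategy or the API of hyperheuristic.py.

```python
import random


def sphere(position):
    """Toy problem to minimise."""
    return sum(value * value for value in position)


# Two toy search operators: (position, best_position, rng) -> new position.
def random_step(position, best, rng):
    return [value + rng.gauss(0.0, 0.5) for value in position]


def move_to_best(position, best, rng):
    return [value + rng.random() * (b - value) for value, b in zip(position, best)]


OPERATORS = {"random_step": random_step, "move_to_best": move_to_best}


def run_metaheuristic(sequence, seed, dimensions=2, agents=10, iterations=30):
    """Apply the operator sequence repeatedly with greedy selection.

    Returns the best fitness found by the population.
    """
    rng = random.Random(seed)
    positions = [
        [rng.uniform(-5.0, 5.0) for _ in range(dimensions)] for _ in range(agents)
    ]
    fitness = [sphere(position) for position in positions]
    for _ in range(iterations):
        for name in sequence:
            best = positions[fitness.index(min(fitness))]
            for index, position in enumerate(positions):
                candidate = OPERATORS[name](position, best, rng)
                new_fitness = sphere(candidate)
                if new_fitness <= fitness[index]:  # greedy selection
                    positions[index], fitness[index] = candidate, new_fitness
    return min(fitness)


def performance(sequence, repetitions=5):
    """Average best fitness over several repetitions (lower is better)."""
    total = sum(run_metaheuristic(sequence, seed) for seed in range(repetitions))
    return total / repetitions


def hyper_heuristic(cardinality=3, steps=20, seed=0):
    """Hill climbing in the heuristic space: mutate one operator, keep if not worse."""
    rng = random.Random(seed)
    names = sorted(OPERATORS)
    current = [rng.choice(names) for _ in range(cardinality)]
    current_performance = performance(current)
    for _ in range(steps):
        neighbour = list(current)
        neighbour[rng.randrange(cardinality)] = rng.choice(names)
        neighbour_performance = performance(neighbour)
        if neighbour_performance <= current_performance:
            current, current_performance = neighbour, neighbour_performance
    return current, current_performance


best_sequence, best_performance = hyper_heuristic()
print(best_sequence, best_performance)
```

The point of the sketch is the two nested levels: the inner loop searches the problem domain with a fixed operator sequence, while the outer loop searches over sequences and scores each one by the performance of the metaheuristic it builds.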

This module contains the Experiment class. An experiment object can run several hyper-heuristic procedures for a list of optimisation problems.

Source: experiment.py

This module contains several functions and methods utilised by many modules in this package.

Source: tools.py

This module contains the implementation of Machine Learning models that can power a hyper-heuristic model from this framework. In particular, it implements a wrapper for a Neural Network model from TensorFlow. It also contains auxiliary data structures that process samples of sequences to generate training data for Machine Learning models.

Source: machine_learning.py

The experiments are saved in JSON files. The data structure of a saved file follows a particular scheme described below.


    data_frame = {dict: N}
    |-- 'problem' = {list: N}
    |  |-- 0 = {str}
    :  :
    |-- 'dimensions' = {list: N}
    |  |-- 0 = {int}
    :  :
    |-- 'results' = {list: N}
    |  |-- 0 = {dict: 6}
    |  |  |-- 'iteration' = {list: M}
    |  |  |  |-- 0 = {int}
    :  :  :  :
    |  |  |-- 'time' = {list: M}
    |  |  |  |-- 0 = {float}
    :  :  :  :
    |  |  |-- 'performance' = {list: M}
    |  |  |  |-- 0 = {float}
    :  :  :  :
    |  |  |-- 'encoded_solution' = {list: M}
    |  |  |  |-- 0 = {int}
    :  :  :  :
    |  |  |-- 'solution' = {list: M}
    |  |  |  |-- 0 = {list: C}
    |  |  |  |  |-- 0 = {list: 3}
    |  |  |  |  |  |-- search_operator_structure
    :  :  :  :  :  :
    |  |  |-- 'details' = {list: M}
    |  |  |  |-- 0 = {dict: 4}
    |  |  |  |  |-- 'fitness' = {list: R}
    |  |  |  |  |  |-- 0 = {float}
    :  :  :  :  :  :
    |  |  |  |  |-- 'positions' = {list: R}
    |  |  |  |  |  |-- 0 = {list: D}
    |  |  |  |  |  |  |-- 0 = {float}
    :  :  :  :  :  :  :
    |  |  |  |  |-- 'historical' = {list: R}
    |  |  |  |  |  |-- 0 = {dict: 5}
    |  |  |  |  |  |  |-- 'fitness' = {list: I}
    |  |  |  |  |  |  |  |-- 0 = {float}
    :  :  :  :  :  :  :  :
    |  |  |  |  |  |  |-- 'positions' = {list: I}
    |  |  |  |  |  |  |  |-- 0 = {list: D}
    |  |  |  |  |  |  |  |  |-- 0 = {float}
    :  :  :  :  :  :  :  :  :
    |  |  |  |  |  |  |-- 'centroid' = {list: I}
    |  |  |  |  |  |  |  |-- 0 = {list: D}
    |  |  |  |  |  |  |  |  |-- 0 = {float}
    :  :  :  :  :  :  :  :  :
    |  |  |  |  |  |  |-- 'radius' = {list: I}
    |  |  |  |  |  |  |  |-- 0 = {float}
    :  :  :  :  :  :  :  :
    |  |  |  |  |  |  |-- 'stagnation' = {list: I}
    |  |  |  |  |  |  |  |-- 0 = {int}
    :  :  :  :  :  :  :  :
    |  |  |  |  |-- 'statistics' = {dict: 10}
    |  |  |  |  |  |-- 'nob' = {int}
    |  |  |  |  |  |-- 'Min' = {float}
    |  |  |  |  |  |-- 'Max' = {float}
    |  |  |  |  |  |-- 'Avg' = {float}
    |  |  |  |  |  |-- 'Std' = {float}
    |  |  |  |  |  |-- 'Skw' = {float}
    |  |  |  |  |  |-- 'Kur' = {float}
    |  |  |  |  |  |-- 'IQR' = {float}
    |  |  |  |  |  |-- 'Med' = {float}
    |  |  |  |  |  |-- 'MAD' = {float}
    :  :  :  :  :  :

where:

- `N` is the number of files within the data_files folder
- `M` is the number of hyper-heuristic iterations (metaheuristic candidates)
- `C` is the number of search operators in the metaheuristic (cardinality)
- `P` is the number of control parameters for each search operator
- `R` is the number of repetitions performed for each metaheuristic candidate
- `D` is the dimensionality of the problem tackled by the metaheuristic candidate
- `I` is the number of iterations performed by the metaheuristic candidate
- `search_operator_structure` corresponds to `[operator_name = {str}, control_parameters = {dict: P}, selector = {str}]`
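The scheme above can be traversed with the standard `json` module. The sketch below builds a small synthetic record that follows the documented keys, writes it to a temporary file, loads it back, and extracts the best candidate per problem. The file name, the operator name, the parameter values, and the helper `summarise` are made up for this example, the `details` entries are trimmed, and it is assumed that a lower `performance` value is better.

```python
import json
import os
import tempfile

# Synthetic record following the documented scheme (values are placeholders).
example = {
    "problem": ["Sphere"],
    "dimensions": [2],
    "results": [
        {
            "iteration": [0, 1],
            "time": [0.10, 0.25],
            "performance": [3.2, 1.7],
            "encoded_solution": [4, 9],
            "solution": [
                # Each search operator: [operator_name, control_parameters, selector]
                [["random_search", {"scale": 0.01}, "greedy"]],
                [["random_search", {"scale": 0.10}, "greedy"]],
            ],
            # Trimmed: 'positions', 'historical' and 'statistics' are omitted here.
            "details": [{"fitness": [3.0, 3.4]}, {"fitness": [1.6, 1.8]}],
        }
    ],
}


def summarise(data):
    """Return one row per problem with its best (lowest-performance) candidate."""
    rows = []
    for problem, dimensions, result in zip(
        data["problem"], data["dimensions"], data["results"]
    ):
        performances = result["performance"]
        best = min(range(len(performances)), key=performances.__getitem__)
        rows.append(
            {
                "problem": problem,
                "dimensions": dimensions,
                "iteration": result["iteration"][best],
                "performance": performances[best],
                "operators": [operator[0] for operator in result["solution"][best]],
            }
        )
    return rows


with tempfile.TemporaryDirectory() as folder:
    path = os.path.join(folder, "example_experiment.json")  # hypothetical file name
    with open(path, "w") as handle:
        json.dump(example, handle)
    with open(path) as handle:
        loaded = json.load(handle)

rows = summarise(loaded)
print(rows)
```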

The following modules are available, but they may not work. They are currently under development.

This module intends to provide metrics for characterising the benchmark functions.

Source: characterisation.py

This module intends to provide several tools for plotting results from the experiments.

Source: visualisation.py

- J. M. Cruz-Duarte, I. Amaya, J. C. Ortiz-Bayliss, H. Terashima-Marín, and Y. Shi, CUSTOMHyS: Customising Optimisation Metaheuristics via Hyper-heuristic Search, SoftwareX, vol. 12, p. 100628, 2020.

- J. M. Cruz-Duarte, I. Amaya, J. C. Ortiz-Bayliss, S. E. Conant-Pablos, H. Terashima-Marín, and Y. Shi, Hyper-Heuristics to Customise Metaheuristics for Continuous Optimisation, Swarm and Evolutionary Computation, 100935.

- J. M. Cruz-Duarte, I. Amaya, J. C. Ortiz-Bayliss, S. E. Conant-Pablos, and H. Terashima-Marín, A Primary Study on Hyper-Heuristics to Customise Metaheuristics for Continuous Optimisation. CEC'2020.

- J. M. Cruz-Duarte, J. C. Ortiz-Bayliss, I. Amaya, Y. Shi, H. Terashima-Marín, and N. Pillay, Towards a Generalised Metaheuristic Model for Continuous Optimisation Problems, Mathematics, vol. 8, no. 11, p. 2046, Nov. 2020.

- J. M. Cruz-Duarte, J. C. Ortiz-Bayliss, I. Amaya, and N. Pillay, Global Optimisation through Hyper-Heuristics: Unfolding Population-Based Metaheuristics, Appl. Sci., vol. 11, no. 12, p. 5620, 2021.

- J. M. Cruz-Duarte, I. Amaya, J. C. Ortiz-Bayliss, N. Pillay. Automated Design of Unfolded Metaheuristics and the Effect of Population Size. 2021 IEEE Congress on Evolutionary Computation (CEC), 1155–1162, 2021.

- J. M. Tapia-Avitia, J. M. Cruz-Duarte, I. Amaya, J. C. Ortiz-Bayliss, H. Terashima-Marín, and N. Pillay. A Primary Study on Hyper-Heuristics Powered by Artificial Neural Networks for Customising Population-based Metaheuristics in Continuous Optimisation Problems, 2022 IEEE Congress on Evolutionary Computation (CEC), 2022.

- J. M. Cruz-Duarte, I. Amaya, J. C. Ortiz-Bayliss, N. Pillay. A Transfer Learning Hyper-heuristic Approach for Automatic Tailoring of Unfolded Population-based Metaheuristics, 2022 IEEE Congress on Evolutionary Computation (CEC), 2022.