Talking Head videos of your favorite rapper rapping about anything, built with open-source NLP and TTS libraries.

- Input: a prompt, a reference audio clip, and a reference photo.

- Output: auto-generated rap lyrics in the style of the rapper, synthesized audio in the cloned voice, and a Talking Head video.

- Intelligent text (lyrics): input a prompt and use pretrained LLMs to craft creative, engaging rap verses.

- Synthetic audio (voice): a text-to-speech (TTS) system clones a voice from an audio sample and reads the generated lyrics in that voice.

-

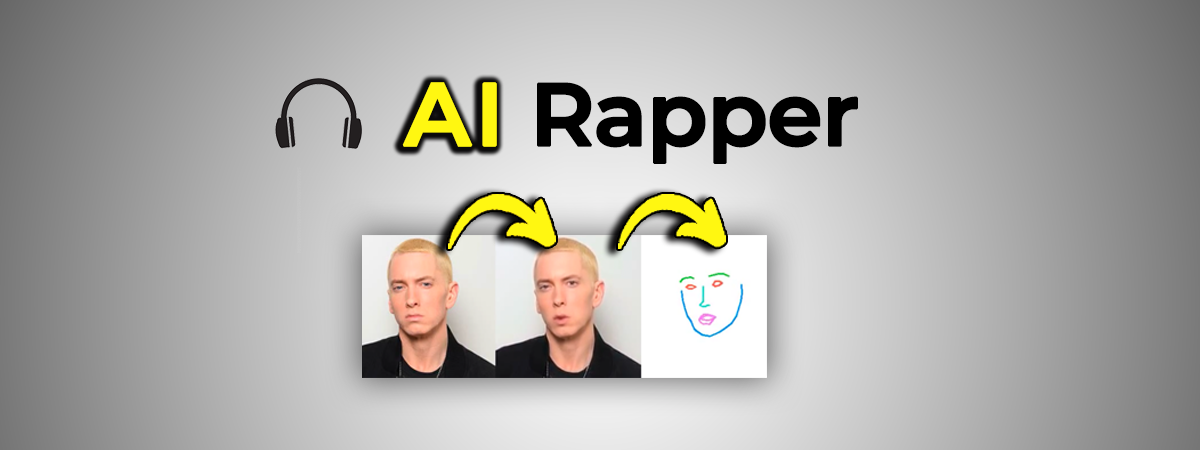

Talking Head (video): input a reference image and cobine with generated audio to create a realistic, engaging talking head.

- Clone MakeItTalk (https://github.com/adobe-research/MakeItTalk/) into the root directory of `ai-rapper`; it is used for video generation.

- Add a strictly 256 x 256 image of the rapper to `MakeItTalk/examples`. The face should be clear and unobstructed, e.g. `MakeItTalk/examples/eminem.png`.

- Add a `.wav` audio file (~10-30 sec) of the rapper in a separate `audio_samples` directory, e.g. `audio_samples/eminem_00.wav`.
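Both input requirements above are easy to get wrong, so a quick preflight check can save a failed run. The sketch below is not part of the repo: `check_inputs`, `png_size`, and `wav_duration` are hypothetical helpers. It assumes a PNG image and an uncompressed PCM `.wav` file, since Python's standard `wave` module cannot read float or compressed WAV files.

```python
import struct
import wave

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def png_size(path):
    """Return (width, height) read from a PNG file's IHDR chunk."""
    with open(path, "rb") as f:
        header = f.read(24)
    # Layout: 8-byte signature, 4-byte chunk length, b"IHDR", then width and height
    if len(header) < 24 or header[:8] != PNG_SIGNATURE or header[12:16] != b"IHDR":
        raise ValueError(f"{path} is not a PNG file")
    return struct.unpack(">II", header[16:24])  # big-endian unsigned 32-bit ints


def wav_duration(path):
    """Return the duration of a PCM .wav file in seconds."""
    with wave.open(str(path), "rb") as w:
        return w.getnframes() / w.getframerate()


def check_inputs(image_path, audio_path, min_sec=10.0, max_sec=30.0):
    """Return a list of problems with the reference inputs (empty list means OK)."""
    problems = []
    width, height = png_size(image_path)
    if (width, height) != (256, 256):
        problems.append(f"image is {width}x{height}, expected 256x256")
    duration = wav_duration(audio_path)
    if not min_sec <= duration <= max_sec:
        problems.append(f"audio is {duration:.1f}s, expected {min_sec:g}-{max_sec:g}s")
    return problems
```

For example, `check_inputs("MakeItTalk/examples/eminem.png", "audio_samples/eminem_00.wav")` returns an empty list when both files meet the requirements above.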

```
pip install -r requirements.txt
python src/app.py
```

Look for the generated video in `MakeItTalk/examples`:

```
/tmp/tmpx_swo6p1eminem_00.wav
/tmp/tmp7zx0u65zem.png
Audio-----> tmpx_swo6p1eminem_00.wav
Parameters===== tmpx_swo6p1eminem_00.wav 48000 [-29 -36 -43 ... 120 125 124]
Loaded the voice encoder model on cuda in 0.04 seconds.
Processing audio file tmpx_swo6p1eminem_00.wav
Loaded the voice encoder model on cuda in 0.03 seconds.
source shape: torch.Size([1, 576, 80]) torch.Size([1, 256]) torch.Size([1, 256]) torch.Size([1, 576, 257])
converted shape: torch.Size([1, 576, 80]) torch.Size([1, 1152])
Run on device: cuda
======== LOAD PRETRAINED FACE ID MODEL examples/ckpt/ckpt_speaker_branch.pth =========
....
....
....
====================================
z = torch.tensor(torch.zeros(aus.shape[0], 128), requires_grad=False, dtype=torch.float).to(device)
OpenCV: FFMPEG: fallback to use tag 0x7634706d/'mp4v'
examples/tmpx_swo6p1eminem_00.wav
ffmpeg version 4.4.2-0ubuntu0.22.04.1 Copyright (c) 2000-2021 the FFmpeg developers
....
....
....
OpenCV: FFMPEG: tag 0x67706a6d/'mjpg' is not supported with codec id 7 and format 'mp4 / MP4 (MPEG-4 Part 14)'
OpenCV: FFMPEG: fallback to use tag 0x7634706d/'mp4v'
Time - ffmpeg add audio: 15.704241514205933
finish image2image gen
examples/test_pred_fls_tmpx_swo6p1eminem_00_audio_embed.mp4
```
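The last line of that log is the path of the finished video. Judging only from this one sample run, MakeItTalk appears to name its output `test_pred_fls_<staged audio name>_audio_embed.mp4`, where the staged audio name carries a temporary-file prefix (`tmpx_swo6p1` above). The hypothetical helper `latest_video` below assumes that naming pattern and returns the newest match; verify the pattern against your MakeItTalk version.

```python
from pathlib import Path
from typing import Optional


def latest_video(examples_dir: str = "MakeItTalk/examples", audio_name: str = "") -> Optional[Path]:
    """Return the most recently modified MakeItTalk output video, or None.

    Assumed naming pattern (inferred from a sample log, not documented):
    test_pred_fls_<tmp prefix><audio stem>_audio_embed.mp4
    """
    stem = Path(audio_name).stem if audio_name else ""
    pattern = f"test_pred_fls_*{stem}_audio_embed.mp4"
    # Sort matches by modification time so the last one is the newest
    candidates = sorted(Path(examples_dir).glob(pattern), key=lambda p: p.stat().st_mtime)
    return candidates[-1] if candidates else None
```

For the run above, `latest_video(audio_name="eminem_00.wav")` would pick up `test_pred_fls_tmpx_swo6p1eminem_00_audio_embed.mp4`, provided the pattern assumption holds.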

HuggingFace Transformers library

- Harnesses fine-tuned and pre-trained language models for rap lyric generation

- `AutoModelForCausalLM` loads a causal language model, which generates text by predicting the next token from the tokens before it, never the ones after it. This suits creative tasks such as writing rap lyrics: a model pretrained on large amounts of diverse text can produce coherent, contextually relevant text from a user prompt.

- `AutoTokenizer` converts input prompts into the token IDs the model expects and decodes generated IDs back into text.

- DistilGPT2 (a distilled, more efficient version of GPT-2) is the model used for generation. See usage in `src/text_generation/text_generator.py`.
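A minimal sketch of lyric generation with these classes, assuming the `distilgpt2` checkpoint from the HuggingFace Hub. `build_prompt`, `generate_lyrics`, the prompt wording, and the sampling settings (`top_p=0.95`, `temperature=0.9`) are illustrative choices, not the contents of `src/text_generation/text_generator.py`.

```python
from typing import Optional


def build_prompt(topic: str, artist: str = "Eminem") -> str:
    """Hypothetical prompt template; the project's real prompt may differ."""
    return f"Rap verse in the style of {artist} about {topic}:\n"


def generate_lyrics(topic: str, max_new_tokens: int = 80, seed: Optional[int] = 0) -> str:
    # Imported lazily so build_prompt() works without torch/transformers installed
    import torch
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained("distilgpt2")
    model = AutoModelForCausalLM.from_pretrained("distilgpt2")
    if seed is not None:
        torch.manual_seed(seed)  # makes sampling repeatable on the same setup

    inputs = tokenizer(build_prompt(topic), return_tensors="pt")
    with torch.no_grad():
        output_ids = model.generate(
            **inputs,
            max_new_tokens=max_new_tokens,
            do_sample=True,  # sample instead of greedy decoding for more varied verses
            top_p=0.95,
            temperature=0.9,
            pad_token_id=tokenizer.eos_token_id,  # GPT-2 has no dedicated pad token
        )
    # Drop the prompt tokens and keep only the newly generated continuation
    new_tokens = output_ids[0, inputs["input_ids"].shape[1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Sampling (`do_sample=True`) rather than greedy decoding tends to give less repetitive verses; fixing the seed makes a run reproducible when you want to compare prompts.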

Tortoise TTS

- Used for synthesizing audio from text

- Supports custom voice models to mimic specific rappers' voices
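A sketch of voice-cloned synthesis with the `tortoise-tts` package, assuming its `TextToSpeech.tts_with_preset` and `tortoise.utils.audio.load_audio` APIs and the reference clip from the setup steps. `split_lyrics` and `synthesize` are hypothetical helpers, not this repo's code. Long lyrics are split into short chunks, much as Tortoise's own `read.py` script splits long text before synthesis, and the joined result is saved at Tortoise's 24 kHz output rate with `torchaudio`.

```python
from typing import List


def split_lyrics(lyrics: str, max_chars: int = 200) -> List[str]:
    """Group non-empty lyric lines into chunks of at most max_chars characters.

    A single line longer than max_chars is kept whole rather than cut mid-line.
    """
    chunks: List[str] = []
    current = ""
    for raw in lyrics.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank lines between verses
        candidate = f"{current} {line}".strip()
        if current and len(candidate) > max_chars:
            chunks.append(current)  # current chunk is full; start a new one
            current = line
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def synthesize(lyrics: str, voice_wav: str = "audio_samples/eminem_00.wav",
               out_path: str = "rap.wav", preset: str = "fast") -> str:
    # Heavy dependencies are imported lazily so split_lyrics() works on its own
    import torch
    import torchaudio
    from tortoise.api import TextToSpeech
    from tortoise.utils.audio import load_audio

    chunks = split_lyrics(lyrics)
    if not chunks:
        raise ValueError("no lyrics to synthesize")
    tts = TextToSpeech()
    # Tortoise's own voice loader reads reference clips at 22.05 kHz
    voice_samples = [load_audio(voice_wav, 22050)]
    clips = []
    for chunk in chunks:
        gen = tts.tts_with_preset(chunk, voice_samples=voice_samples, preset=preset)
        clips.append(gen.squeeze(0).cpu())  # (1, 1, N) -> (1, N)
    audio = torch.cat(clips, dim=1)  # join chunks along the time axis
    torchaudio.save(out_path, audio, 24000)  # Tortoise generates 24 kHz audio
    return out_path
```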

CUDA Toolkit

- Trained Eminem's voice (as in the example) on a custom TTS model.

- NVIDIA's CUDA Toolkit used to accelerate GPU training.

PyTorch Audio

- The `torchaudio` library handles audio data, saving the synthesized rap audio in `*.wav` format

MakeItTalk

- Open-source GitHub repo used for video synthesis, harnessing OpenCV and FFMPEG

- Demo: https://github.com/yzhou359/MakeItTalk/blob/main/quick_demo_tdlr.ipynb

OpenCV

- Used to segment facial features in the input image and lip-sync them to the audio

FFMPEG

- Used to combine the generated audio and video into a single, smooth, widely compatible video file
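The `Time - ffmpeg add audio` line in the sample log appears to time a muxing step, where the silent frames written through OpenCV are combined with the synthesized audio. The sketch below shows one common way to do this with the `ffmpeg` CLI; `mux_command` and `mux` are hypothetical helpers, not MakeItTalk's exact command.

```python
import shutil
import subprocess


def mux_command(video, audio, out):
    """Build an ffmpeg command that adds an audio track to a silent video."""
    return [
        "ffmpeg", "-y",                    # overwrite the output if it exists
        "-i", video,                       # input 0: video frames
        "-i", audio,                       # input 1: synthesized rap audio
        "-map", "0:v:0", "-map", "1:a:0",  # video from input 0, audio from input 1
        "-c:v", "copy",                    # keep the video stream as-is (no re-encode)
        "-c:a", "aac",                     # encode audio as AAC for MP4 compatibility
        "-shortest",                       # stop at the end of the shorter stream
        out,
    ]


def mux(video, audio, out):
    """Run the mux command, raising if ffmpeg is missing or fails."""
    if shutil.which("ffmpeg") is None:
        raise RuntimeError("ffmpeg not found on PATH")
    subprocess.run(mux_command(video, audio, out), check=True)
    return out
```

Copying the video stream (`-c:v copy`) avoids a second lossy encode, while AAC is the audio codec MP4 players most commonly expect.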